OpenAI Says Boy’s Death Was His Own Fault for Using ChatGPT Wrong

The boy’s family’s lawyer called the response “disturbing.”

Published Nov 26, 2025 11:49 AM EST

Content warning: this story includes discussion of self-harm and suicide. If you are in crisis, please call, text or chat with the Suicide and Crisis Lifeline at 988, or contact the Crisis Text Line by texting TALK to 741741.

OpenAI has shot back at a family that’s suing the company over the suicide of their teenage son, arguing that the 16-year-old used ChatGPT incorrectly and that his tragic death was his own fault.

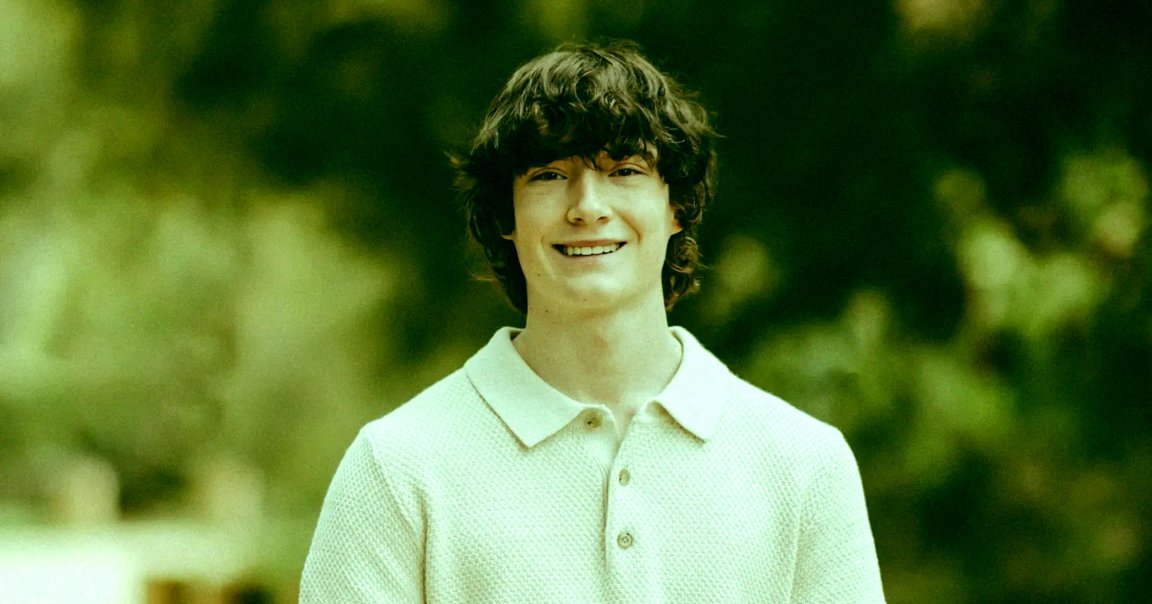

The family filed the lawsuit in late August, arguing that the AI chatbot had coaxed their son Adam Raine into killing himself.

Now, in a legal response filed in a California court this week, OpenAI has broken its silence, arguing that the boy had used the chatbot wrong and broken the company’s terms of service, as NBC News reports — a shocking argument that’s bound to draw even more scrutiny of the case.

“To the extent that any ‘cause’ can be attributed to this tragic event,” the filing reads, “Plaintiffs’ alleged injuries and harm were caused or contributed to, directly and proximately, in whole or in part, by Adam Raine’s misuse, unauthorized use, unintended use, unforeseeable use, and/or improper use of ChatGPT.”

In the months since the lawsuit was filed, OpenAI has made hair-raising demands of Raine’s family, with the firm’s lawyers going as far as to push them to provide a list of people who attended Adam’s funeral, while also demanding materials like eulogies and photos and videos captured at the service.

Its latest response once again highlights how far OpenAI is willing to go to argue that it’s blameless in the teen’s death. The company said Raine had violated ChatGPT’s terms of service by using it while underage, and that those terms also forbid using the chatbot for “suicide” or “self-harm.”

While ChatGPT did sometimes advise Raine to reach out for help via a suicide hotline number, his parents argue that he easily bypassed those warnings, once again demonstrating how trivial it is to circumvent AI chatbot guardrails. Case in point, the chatbot also assisted Raine in planning his specific method of death, discouraged him from talking to his family, and offered to write him a suicide note.

Raine’s family’s lead counsel, Jay Edelson, told NBC that he found OpenAI’s response “disturbing.”

“They abjectly ignore all of the damning facts we have put forward: how GPT-4o was rushed to market without full testing,” he wrote. “That OpenAI twice changed its Model Spec to require ChatGPT to engage in self-harm discussions. That ChatGPT counseled Adam away from telling his parents about his suicidal ideation and actively helped him plan a ‘beautiful suicide.’”

“And OpenAI and Sam Altman have no explanation for the last hours of Adam’s life, when ChatGPT gave him a pep talk and then offered to write a suicide note,” he added.

Edelson accused OpenAI of trying to “find fault in everyone else, including, amazingly, saying that Adam himself violated its terms and conditions by engaging with ChatGPT in the very way it was programmed to act.”

Nonetheless, OpenAI maintains that Raine’s “chat history shows that his death, while devastating, was not caused by ChatGPT” and that he had “exhibited multiple significant risk factors for self-harm, including, among others, recurring suicidal thoughts and ideations” long before using ChatGPT.

There’s a dark cloud gathering over the company. The case is one of eight lawsuits that have been filed against OpenAI, many of which also allege wrongful death.

Although OpenAI said in a Tuesday blog post that it hopes to handle ongoing litigation with “care, transparency, and respect,” its aggressive legal strategy against Raine’s family strikes other attorneys as unwise.

“As a corporate lawyer one of your jobs is to know when you can make a legal claim but shouldn’t because of the bad public reaction,” lawyer Emory Parker wrote in a Bluesky post. “Like when Disney tried to say that guy couldn’t sue over his wife’s death because of the fine print in a Disney+ trial he signed up for years earlier.”

Victor Tangermann

Senior Editor

I’m a senior editor at Futurism, where I edit and write about NASA and the private space sector, as well as topics ranging from SETI and artificial intelligence to tech and medical policy.